On AI Memory

ChatGPT's persistent memory feature is both exciting and terrifying. Its descriptions of me were eerily accurate. I wouldn't share most of this information publicly (my salary, how much I have been sleeping lately, my current location, etc.). Yet I had casually shared all of it with an AI assistant, never realizing it would remember indefinitely.

Let's set the privacy issues aside for this post. If you think of ChatGPT not as a web app wrapped around an LLM but as an actual assistant, it makes sense that it would remember all this. A personal assistant who remembers details about your life is far more valuable than one with amnesia.

Many people think that an AI assistant with memory will lock you in, the way streaming services do by offering a personalized experience. It isn't easy to leave Spotify once you have years of listening history; no other music streaming service can instantly match its discovery and recommendations. A year ago, I unsubscribed from Spotify, hoping I could simply use YouTube for music, reasoning that every piece of audio content on Spotify was surely on YouTube too. The truth is that I still miss Spotify and might resubscribe at some point.

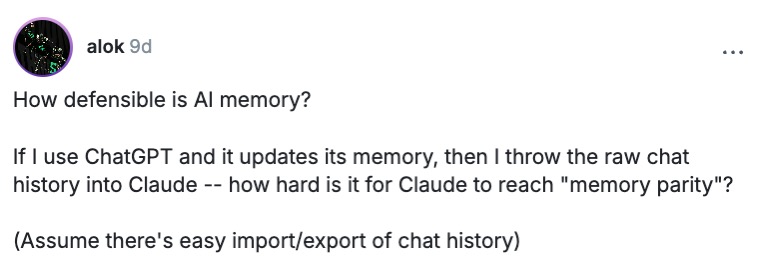

|

|---|

| Alok's question on how on defensible is AI memory |

However, I don't think this holds for AI assistants. The previous generation of machine learning systems was task-specific: recommending movies, music, and so on. Today's transformer-based models can absorb almost any context from a plain stream of tokens. Imagine exporting your chat history from ChatGPT to Claude. Claude would then have the same memories. The lock-in effect is not comparable to what we had with Web2 services.

This opens up a whole new design space for stateless agents. Imagine an AI assistant that you can spin up on demand, feeding it only the memories you explicitly store and manage yourself. Think of these memories as entries in a personal journal. Each time you interact, the assistant reads from your journal, provides insights or summaries, and then writes the outcome back into your journal. After your interaction, you shut the assistant down completely. Users can truly own their interactions when AI models can seamlessly import, export, and interpret standardized data formats. This approach lets you retain complete control over your data, prevents you from getting locked into a particular AI assistant provider, and, crucially, doesn't have to compromise the UX.
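The read, answer, write-back, shut-down loop described above can be sketched in a few lines. This is a minimal Python sketch under loud assumptions: the journal is a local JSON file, and `JournalEntry`, `run_session`, and `fake_model` are hypothetical names invented for illustration; `fake_model` stands in for whichever provider's model you would plug in. The point is that the assistant keeps no state of its own: everything it knows comes from the file, and everything it learns goes back into it.

```python
import json
import tempfile
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from pathlib import Path
from typing import Callable, List


@dataclass
class JournalEntry:
    """One memory the user chose to keep (hypothetical schema)."""
    timestamp: str
    topic: str
    text: str


def load_journal(path: Path) -> List[JournalEntry]:
    # The journal is a plain JSON file owned by the user, not by any provider.
    if not path.exists():
        return []
    return [JournalEntry(**e) for e in json.loads(path.read_text())]


def save_journal(path: Path, entries: List[JournalEntry]) -> None:
    path.write_text(json.dumps([asdict(e) for e in entries], indent=2))


def run_session(path: Path, prompt: str, model: Callable[[str], str], topic: str) -> str:
    """Spin up, read the journal, answer, write the outcome back, shut down.

    The function keeps no state between calls; all memory lives in the file."""
    entries = load_journal(path)
    context = "\n".join(f"[{e.topic}] {e.text}" for e in entries)
    answer = model(f"Memories:\n{context}\n\nUser: {prompt}")
    entries.append(JournalEntry(
        timestamp=datetime.now(timezone.utc).isoformat(),
        topic=topic,
        text=f"Asked: {prompt} / Outcome: {answer}",
    ))
    save_journal(path, entries)
    return answer


def fake_model(full_prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call: reports how many memories it was given.
    n = full_prompt.count("\n[")
    return f"memories seen: {n}"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        journal = Path(d) / "journal.json"
        print(run_session(journal, "Plan my week", fake_model, "planning"))    # memories seen: 0
        print(run_session(journal, "Any follow-ups?", fake_model, "planning"))  # memories seen: 1
```

Because the journal is just a file the user owns, switching providers would mean passing a different `model` function; the memories stay where they are.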

Imagine a future with a feed of AI agents, each offering access to specialized tools and the latest AI models. To use one, you'd simply write your prompt, hand over your personal memory journal, and enjoy a seamless experience. Since every agent reads the same journal, this portability doesn't dilute the AI's effectiveness.

Moreover, specialized, task-focused AI agents could draw on your journal entries for highly contextual interactions. Need a financial planning session? Spin up an agent trained specifically for the financial domain and temporarily grant it access to the relevant sections of your journal. Let the agent update your accounting books. Finished? Shut it down.
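Scoped, temporary access to the journal could look something like the following. Again, this is a hypothetical sketch: sections are plain string labels, an agent is modeled as a function from the entries it may see to the entries it wants to write, and `Entry`, `run_scoped`, and `finance_agent` are illustrative names, not any real product's API. The agent only ever receives the granted sections, and any write outside those sections is rejected rather than merged.

```python
from dataclasses import dataclass
from typing import Callable, List, Set, Tuple


@dataclass(frozen=True)
class Entry:
    section: str  # illustrative labels such as "finance", "health", "travel"
    text: str


# Hypothetical agent interface: takes the entries it was granted, returns entries to write back.
Agent = Callable[[List[Entry]], List[Entry]]


def run_scoped(journal: List[Entry], agent: Agent, granted: Set[str]) -> Tuple[List[Entry], List[Entry]]:
    """Give the agent a temporary, filtered view, then merge back only in-scope writes.

    Returns (updated_journal, rejected_writes). The full journal is never passed to the agent."""
    view = [e for e in journal if e.section in granted]
    proposed = agent(view)
    accepted = [e for e in proposed if e.section in granted]
    rejected = [e for e in proposed if e.section not in granted]
    return journal + accepted, rejected


def finance_agent(view: List[Entry]) -> List[Entry]:
    # Hypothetical finance agent: totals the expenses it can see and books a summary.
    # It also attempts an out-of-scope write to show that run_scoped rejects it.
    total = sum(float(e.text.split("$")[1]) for e in view if e.text.startswith("expense"))
    return [
        Entry("finance", f"books updated: total expenses ${total:.2f}"),
        Entry("health", "note the agent should not be able to write"),
    ]


if __name__ == "__main__":
    journal = [
        Entry("finance", "expense: rent $1200"),
        Entry("finance", "expense: groceries $300.50"),
        Entry("health", "slept 5h last night"),
    ]
    updated, rejected = run_scoped(journal, finance_agent, {"finance"})
    print(updated[-1].text)  # books updated: total expenses $1500.50
    print(len(rejected))     # 1
```

Rejecting out-of-scope writes, rather than trusting the agent, keeps the user as the only party who decides what enters the journal.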